At this point, it should be clear that Salesforce AI is capable of great things.

Tools across the Salesforce AI landscape can automate tasks, generate personalized content, and drive smarter business decisions. But behind all of these interactions is a system designed to keep your customer data safe: the Einstein Trust Layer.

For this final installment in our Salesforce AI series, we’re focusing on how Salesforce delivers trusted AI at scale, and why this foundational layer is so important for your successful AI adoption.

What is the Einstein Trust Layer?

The Einstein Trust Layer is Salesforce’s built-in, secure AI architecture intended to protect your most sensitive data as you implement all of these AI tools. It combines technical guardrails, privacy controls, and policy enforcement to help companies benefit from AI, without putting any customer or company data at risk.

Whether you’re experimenting with prompt-based flows, deploying AI agents, or generating personalized customer communications, the Einstein Trust Layer makes sure it’s all done securely, compliantly, and transparently.

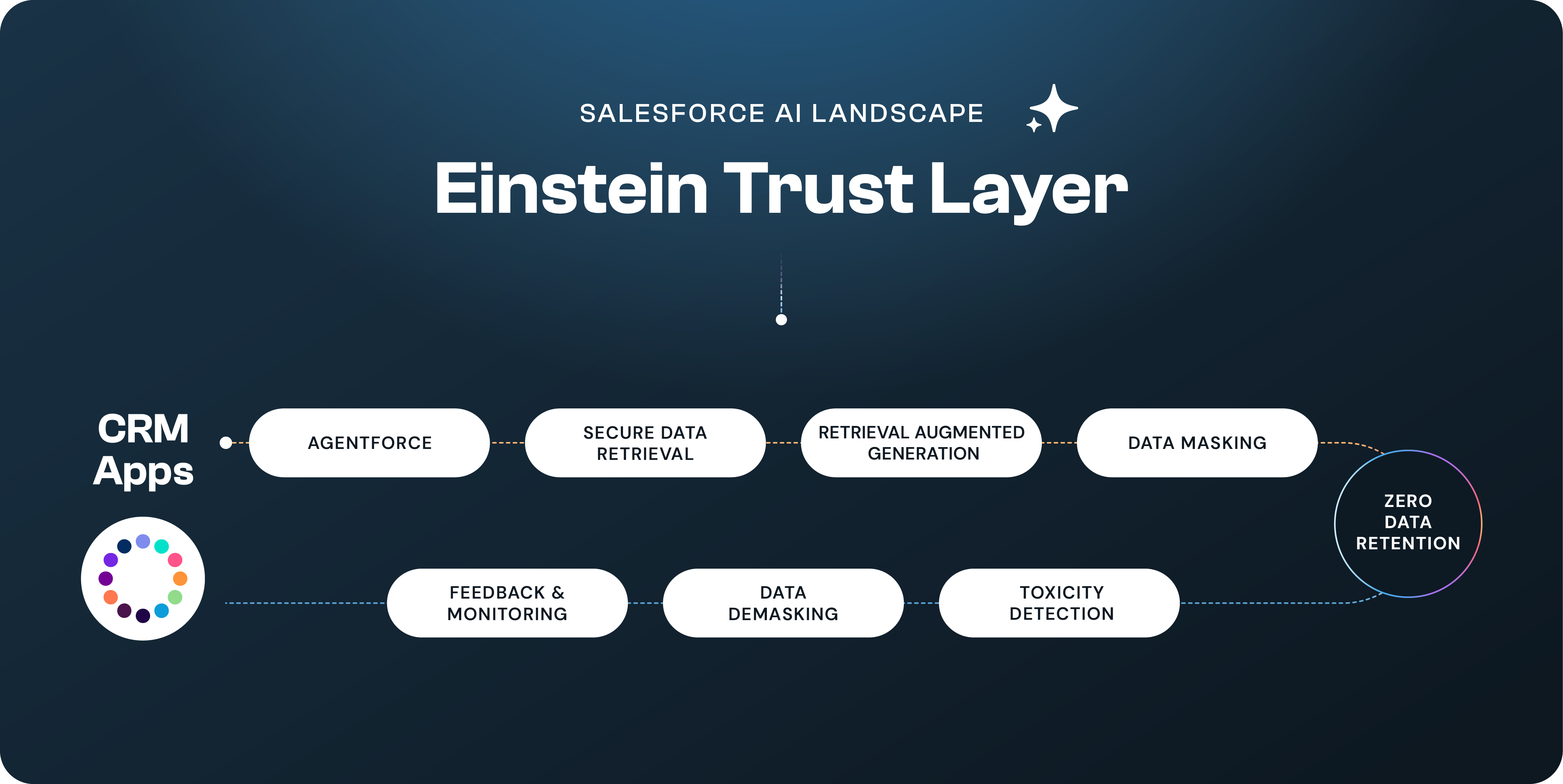

Consider the following features of the Einstein Trust Layer:

- Secure Data Retrieval and Dynamic Grounding: The Trust Layer ensures that only the data each user is allowed to access is used to inform AI outputs, so results are personalized without exposing sensitive information.

- Data Masking: Any sensitive information, like customer names or IDs, is automatically detected and masked before it ever reaches the LLM.

- Zero Retention: Your company’s data is never stored, viewed, or used for training by third-party LLM providers like OpenAI. So, you can rest assured that you’ll stay in control of your data at all times.

- Toxicity Detection: Each AI output is scored for toxicity and logged, helping your business monitor and reduce harmful or inappropriate responses.

- Audit Trail: All your AI activity, including prompts, responses, and risk scores, is logged in Data 360. This gives you complete visibility and control over how AI is being used.
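
To make the data masking idea above more concrete, here is a minimal Python sketch of the general technique: swap sensitive values for placeholders before a prompt leaves your system, then restore them in the response. This is not Salesforce's implementation. The `mask` and `unmask` helpers, the two regular expressions, and the placeholder format are all assumptions made for illustration, and real detection would be far more robust.

```python
import re

# Hypothetical patterns for illustration only; real detection of names,
# IDs, and other sensitive fields needs much more than two regexes.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def mask(text):
    """Replace detected values with placeholders.

    Returns the masked text and a mapping from placeholder to original value.
    """
    mapping = {}
    for label, pattern in PATTERNS.items():
        def substitute(match, label=label):
            token = f"<{label}_{len(mapping) + 1}>"
            mapping[token] = match.group(0)
            return token
        text = pattern.sub(substitute, text)
    return text, mapping


def unmask(text, mapping):
    """Put the original values back into a response that contains placeholders."""
    for token, original in mapping.items():
        text = text.replace(token, original)
    return text


prompt = "Draft a follow-up for jane.doe@example.com, phone 555-123-4567."
masked, mapping = mask(prompt)
print(masked)                   # the version that would be sent to the LLM
print(unmask(masked, mapping))  # the original values restored locally
```

In this sketch, only the masked prompt would ever be sent to the model, while the mapping stays on your side so the final output can be restored for the user.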

How We See It

At Modelit, we see the Einstein Trust Layer as perhaps the most important aspect of the Salesforce AI landscape. While the exciting tools and features get the most attention, it’s the Trust Layer that makes all of it viable for enterprise use.

Security and transparency are built right into the system. That’s essential when you’re dealing with regulated industries or handling large volumes of customer data. For our clients, knowing that no data is stored or used without consent, and that every action is auditable, is a major reason they feel confident exploring AI solutions.

Summary

From AI-generated emails and lead research to customer-facing agents and AI-powered shopping experiences, Salesforce has launched a bold vision for business AI. And we’re sure this landscape will only continue to grow and evolve from here.

With the Einstein Trust Layer powering every AI interaction, Salesforce gives businesses a secure way to experiment, innovate, and scale with AI, without sacrificing control, visibility, or trust.

Thanks for following along in our Salesforce AI series.

If you’re ready to take the next step with these tools, let’s talk about what it could look like for your business.